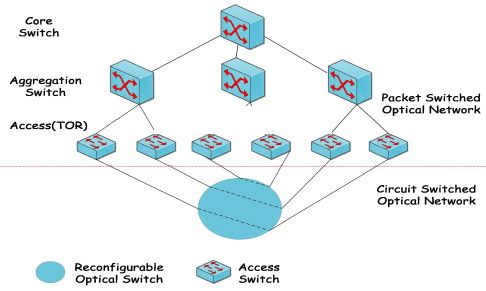

Aggregation Switch vs Core Switch

Short answer: in a standard three-tier campus LAN, the aggregation (distribution) layer concentrates access traffic and enforces policy, while the core layer is a fast, highly available, low-latency backbone. Keep policy at aggregation; keep the core clean and quick.

Three-Tier Campus at a Glance

Access connects endpoints and handles PoE/authentication. Aggregation is the inter-VLAN routing and policy hub. Core provides high-reliability, high-speed transit between aggregation blocks and to the data center/edge.

Difference 1: Roles and Responsibilities

Core: minimal features, maximum reliability and speed. Prioritize fast reroute/ECMP, modular redundancy, and deterministic paths. Avoid spanning-tree dynamics in the core whenever possible.

Aggregation: the policy workhorse. Terminate VLANs/SVIs, perform inter-VLAN routing, apply ACLs and QoS, and often run segmentation (VRF or VXLAN). Default gateways live here; uplinks go northbound to the core.

Difference 2: Performance & Sizing (How to Read the Specs)

- Port mix: Aggregation typically concentrates many 1/10/25G downlinks and uplinks at 40/100G. Core leans toward high-count 100/400G line-rate ports.

- Throughput / fabric capacity: Switching (backplane) capacity should be at least the sum of all port line rates. Datasheets usually count both directions, so compare the quoted figure against 2 × that sum. Treat marketing numbers cautiously and look for per-ASIC details when available.

- Packets per second (pps): For conservative sizing with 64-byte frames, a handy rule is pps ≈ bandwidth(Gbps) × 10^9 ÷ 672, where 672 bits is the 64-byte frame plus 20 bytes of preamble and inter-frame gap on the wire. Use it to sanity-check whether the box can genuinely push line rate.

- Buffers and latency: Core targets microsecond-level latency and stable micro-burst absorption. Aggregation values richer QoS, shaping, and flexible buffering to handle varied user/application traffic.

- Table capacities: Aggregation cares about ACL TCAM, QoS queues, and policy terms. Core cares about FIB size, routing scale, ECMP paths, and MAC/ARP stability.

- Oversubscription: Aggregation collects traffic from many access switches and often runs N:1 oversubscription (e.g., 240G down to 100G up ≈ 2.4:1). The core carries site-wide load, so keep oversubscription low, ideally near 1:1.
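The pps rule of thumb above can be turned into a quick datasheet check. The sketch below is only illustrative: the port mix and the 1000 Mpps datasheet figure are made-up assumptions, not values for any real switch, and the helper names are hypothetical.

```python
# On the wire, each Ethernet frame carries 20 extra bytes:
# 8 bytes of preamble/SFD plus a 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(bandwidth_gbps, frame_bytes=64):
    """Packets per second needed to fill a link with frames of the given size."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8  # 672 bits for 64-byte frames
    return bandwidth_gbps * 1e9 / bits_on_wire


def can_forward_line_rate(port_speeds_gbps, datasheet_mpps):
    """Compare the worst-case pps demand of all ports with a datasheet Mpps figure."""
    required_mpps = sum(line_rate_pps(speed) for speed in port_speeds_gbps) / 1e6
    return datasheet_mpps >= required_mpps, required_mpps


# Hypothetical aggregation box: 48 x 10G downlinks and 4 x 100G uplinks.
ports = [10] * 48 + [100] * 4
ok, required = can_forward_line_rate(ports, datasheet_mpps=1000.0)
print(f"required {required:.1f} Mpps, line rate achievable: {ok}")
```

With these assumed numbers the box would need roughly 1,310 Mpps to forward 64-byte frames at line rate on every port, so a 1000 Mpps forwarding rate would fall short in the worst case.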

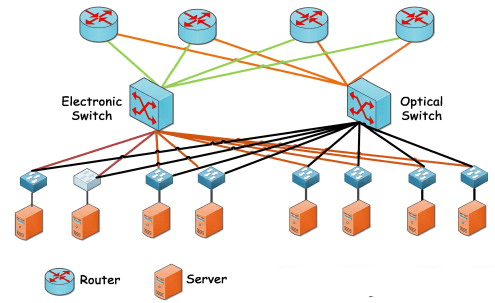

Difference 3: Reliability & Architecture

Core: design out single points of failure from day one, with dual supervisors/PSUs/fans, ISSU/NSF/SSO for hitless upgrades, and sub-second convergence with IGP + ECMP. Chassis platforms or high-end fixed switches with virtualization are common.

Aggregation: redundant yet change-friendly. Stacking/MC-LAG/VSS-like designs eliminate L2 loops and enable cross-box LAG. VXLAN-EVPN often terminates or bridges here. Keep policy churn from bubbling up into the core.

Practical topology tip: prefer L3 point-to-point links between aggregation and core (IGP + ECMP). Collapse L2 domains below aggregation to speed up convergence and contain faults.
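To make the ECMP part of this tip concrete, here is a minimal sketch of per-flow path selection. It assumes a generic hash over the 5-tuple; real switches use their own hardware hash functions and field selections, so treat `pick_path` and the device names as hypothetical.

```python
import hashlib
from collections import Counter


def pick_path(flow, paths):
    """Choose a next hop by hashing the flow 5-tuple (illustrative, not a vendor algorithm)."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


core_paths = ["core-1", "core-2"]  # hypothetical equal-cost next hops

# (src IP, dst IP, protocol, src port, dst port)
flow = ("10.1.10.5", "10.2.20.9", 6, 51512, 443)

# The same flow always hashes to the same path, which keeps its packets in order.
print(pick_path(flow, core_paths))

# Many distinct flows spread across both paths.
flows = [("10.1.10.5", "10.2.20.9", 6, 50000 + i, 443) for i in range(1000)]
print(Counter(pick_path(f, core_paths) for f in flows))
```

The design consequence is that load balancing works per flow, not per packet: many flows spread across the links, but a single large flow still rides one link.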

Difference 4: Features & Operations

Aggregation does: identity-based access (802.1X/MAB), inter-VLAN routing (SVIs), ACL/QoS for voice/video/VDI, segmentation (VRF/VXLAN), frequent policy changes.

Core does: fast, simple routing (summarization, ECMP) and minimal policy. It changes rarely and follows a strict baseline.
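Summarization means advertising one covering prefix in place of many specific ones, which keeps routing tables small and hides churn in individual subnets. The sketch below uses Python's standard `ipaddress` module to show the arithmetic; the VLAN subnets are invented for illustration.

```python
import ipaddress


def summarize(prefixes):
    """Collapse contiguous prefixes into the smallest set of covering networks."""
    networks = [ipaddress.ip_network(p) for p in prefixes]
    return [str(net) for net in ipaddress.collapse_addresses(networks)]


# Hypothetical user VLAN subnets inside one aggregation block.
block_a_vlans = ["10.10.0.0/24", "10.10.1.0/24", "10.10.2.0/24", "10.10.3.0/24"]
print(summarize(block_a_vlans))  # ['10.10.0.0/22']
```

Summarization only works this cleanly when each block's subnets are allocated from one contiguous, aligned range, which is a good reason to plan addressing per aggregation block.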

Aggregation vs Core — At-a-Glance Comparison

| Aspect | Aggregation (Distribution) | Core |
|---|---|---|
| Primary role | Policy hub, inter-VLAN routing, segmentation | High-speed, low-latency transit backbone |
| Typical ports | Many 1/10/25G down; 40/100G up | Line-rate 100/400G density |
| Routing focus | SVIs, ACL/QoS, VRF/VXLAN | IGP + ECMP; summarization; minimal policy |
| Redundancy pattern | Stacking/MC-LAG to access and northbound | Dual sup/PSU, ISSU/NSF/SSO; chassis/virtualized |
| Oversubscription | Common; tune per zone | Keep low; target near 1:1 |
| Change cadence | Frequent (policy-driven) | Infrequent (baseline-driven) |
| Key tables | ACL TCAM, QoS queues, policy terms | FIB scale, ECMP paths, MAC/ARP stability |

At the functional layer, check our differences between Layer 2 and Layer 3 switches

Design Checklist You Can Apply Today

- Keep L2 below aggregation; run L3 point-to-point + IGP/ECMP northbound.

- Place SVIs/gateways at aggregation; keep the core gateway-free unless policy requires otherwise.

- Dual-home access to two aggregation boxes; dual-home aggregation to two core boxes; use MC-LAG/stacking appropriately.

- In the campus IGP, summarize each aggregation block's prefixes toward the core and use ECMP; integrate BGP to DC/edge as needed.

- QoS: classify and prioritize at aggregation; keep the core light-touch to avoid bottlenecks.

- Change management: change the core rarely (roughly quarterly); aggregation changes more often. Always stage changes and have a rollback plan.
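The dual-homing item in the checklist is easy to audit from an inventory export. The following is a rough sketch under the assumption that you can build a mapping of each device to its upstream neighbors; the device names and the `find_single_homed` helper are hypothetical.

```python
def find_single_homed(uplinks, min_uplinks=2):
    """Return devices with fewer than `min_uplinks` distinct upstream neighbors."""
    return sorted(
        device
        for device, upstreams in uplinks.items()
        if len(set(upstreams)) < min_uplinks
    )


# Hypothetical inventory: device -> upstream neighbors (one entry per physical link).
uplinks = {
    "access-1": ["agg-1", "agg-2"],
    "access-2": ["agg-1"],            # only one uplink
    "agg-1": ["core-1", "core-2"],
    "agg-2": ["core-1", "core-1"],    # two links, but both land on the same core box
}
print(find_single_homed(uplinks))  # ['access-2', 'agg-2']
```

Counting distinct neighbors rather than links is deliberate: two uplinks to the same upstream box add bandwidth but do not survive the loss of that box.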

Sizing Math

• Fabric capacity ≈ sum of all port line-rates × 2 (full-duplex rule of thumb).

• pps ≈ bandwidth(Gbps) × 10^9 ÷ 672 (64-byte worst case).

• LAG capacity ≈ per-member bandwidth × member count (mind hashing/flows).

• Oversubscription ≈ total downlink (× concurrency factor) ÷ total uplink.
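The fabric, LAG, and oversubscription rules above are simple enough to script. This is a minimal sketch with assumed inputs; `uplinks_needed` is a hypothetical helper that inverts the oversubscription formula and is not part of the original rules.

```python
import math


def fabric_capacity_gbps(port_speeds_gbps):
    """Full-duplex rule of thumb: sum of port line rates times 2."""
    return 2 * sum(port_speeds_gbps)


def lag_capacity_gbps(member_gbps, members):
    """Aggregate LAG bandwidth; a single flow is still limited to one member."""
    return member_gbps * members


def oversubscription(downlink_gbps, uplink_gbps, concurrency=1.0):
    """Ratio of (concurrency-weighted) downlink bandwidth to uplink bandwidth."""
    return downlink_gbps * concurrency / uplink_gbps


def uplinks_needed(downlink_gbps, uplink_member_gbps, target_ratio, concurrency=1.0):
    """Smallest number of uplink members that keeps the ratio at or below the target."""
    return math.ceil(downlink_gbps * concurrency / (target_ratio * uplink_member_gbps))


ports = [10] * 24 + [100] * 2  # hypothetical 24 x 10G down, 2 x 100G up
print(fabric_capacity_gbps(ports))                   # 880
print(lag_capacity_gbps(100, 2))                     # 200
print(oversubscription(240, 100))                    # 2.4
print(uplinks_needed(240, 100, target_ratio=1.0))    # 3
```

The concurrency factor is an estimate of how many downlinks are busy at the same time; measure it from real traffic where possible instead of guessing.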

Common Pitfalls to Avoid

• Letting policy creep into the core—risk and complexity explode; push it back to aggregation.

• Relying on stacking at the core—software faults gain a huge blast radius; prefer chassis/dual-control designs.

• Allowing L2 to bleed into the core—loops/jitter can impact the entire campus.

• Skipping oversubscription math—expect congestion at peaks.

• Moving policy boundaries upward—aggregation gets lighter but the core becomes “dirty,” hurting future operations.

Collapsed-Core Note

Small/medium sites often adopt a collapsed core (aggregation + core in one platform). Balance high-speed backbone requirements with policy scale (port count, table sizes). Keep the same principles: policy where it belongs, transit kept clean.

FAQ

- What is an aggregation switch vs a core switch?

  An aggregation switch concentrates access traffic and enforces policy and inter-VLAN routing. A core switch provides high-speed, low-latency, highly available transit between aggregation blocks and to the DC/edge.

- Can an aggregation switch replace a core switch?

  Not in larger campuses. Aggregation gear can be feature-rich, but the core requires higher density/throughput, lower oversubscription, and stricter HA.

- Should default gateways live in aggregation or core?

  Place them in aggregation. Keep the core policy-free whenever possible.

- Do I keep Layer 2 in the core?

  No. Keep L2 below aggregation and use L3 (IGP + ECMP) between aggregation and core for faster convergence and fault isolation.

- How do I size uplinks between aggregation and core?

  Apply a concurrency factor to the downlinks, then check that both the total uplink bandwidth and the platform's pps capability can handle the resulting peak. Keep oversubscription low in the core.

- When is a collapsed core appropriate?

  In small/medium campuses where policy scale and backbone needs can be met on a single platform. Maintain the same separation of concerns logically.

Conclusion

When comparing aggregation switch vs core switch, follow one rule: push policy down to aggregation and reserve the core for fast, resilient transit. That principle produces cleaner designs, faster convergence, and easier day-2 operations—and scales from small collapsed cores to large multi-building campuses.

For management-focused differences, see Aggregation vs Core Switch guide

Cisco core switches: Catalyst 9400 / Catalyst 9500 / Catalyst 9600

Cisco aggregation switches: Catalyst 9300